The World Health Organization (WHO) is calling for caution in the use of artificial intelligence (AI)-based large language model tools (LLMs) in order to protect and promote human well-being, safety, autonomy, and public health.

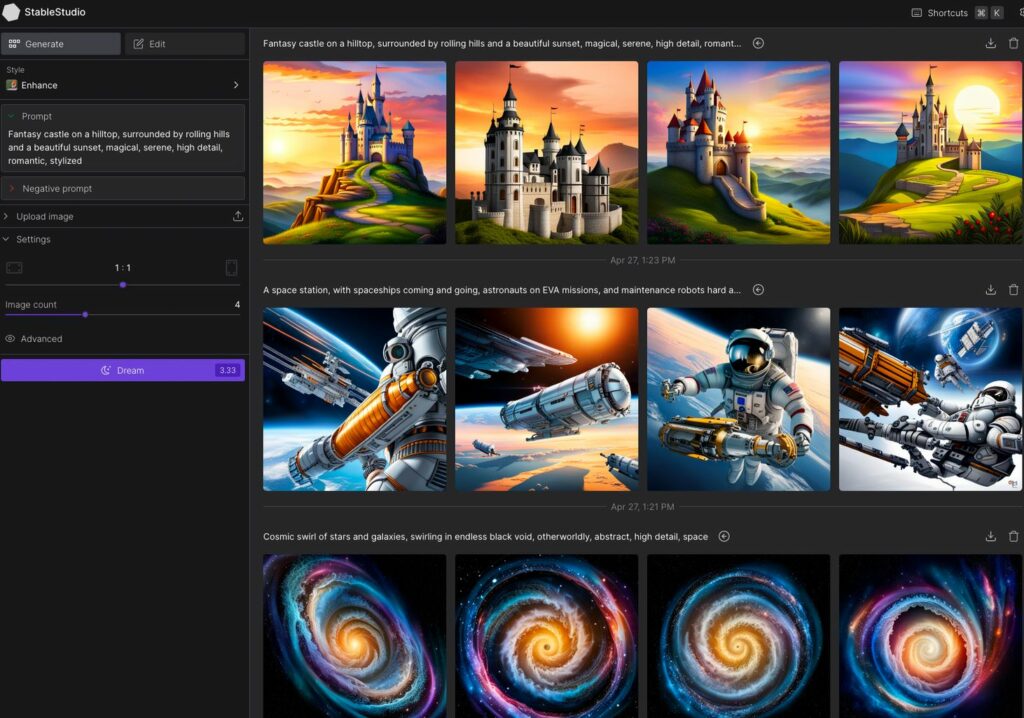

LLMs power platforms such as ChatGPT and Bard, which imitate human understanding, processing, and communication. They have rapidly gained popularity and are being used experimentally to meet various health-related needs.

According to WHO, the risks must be examined carefully when LLMs are used to improve access to health information, to serve as decision-support tools, or even to enhance diagnostic capacity in under-resourced settings, in order to protect people’s health and reduce disparities.

While WHO recognizes the potential benefits of utilizing technologies like LLMs to support healthcare professionals, patients, researchers, and scientists, there is concern that the cautious approach typically adopted with new technologies in the health sector is not consistently applied to LLMs.

That caution involves upholding “key values such as transparency, inclusivity, public engagement, expert supervision, and rigorous evaluation.”

Hasty adoption of untested systems could lead to errors by healthcare workers, harm patients, erode trust in AI, and consequently undermine or delay the long-term potential benefits and applications of such technologies globally.

Key concerns necessitating rigorous oversight for the safe, effective, and ethical utilization of LLMs include:

- The data used to train AI may be biased, producing misleading or inaccurate information that poses risks to health, equity, and inclusiveness.

- LLM-generated responses may appear authoritative and plausible to end-users, yet they could be entirely incorrect or contain serious errors, particularly in response to health-related questions.

- LLMs may be trained on data for which no prior consent to such use was obtained, and they may not adequately protect sensitive data, including health-related information, that users provide to generate a response.

- LLMs can be misused to generate and disseminate highly persuasive disinformation in text, audio, or video form, making it difficult for the public to distinguish it from reliable health content.

While WHO remains committed to harnessing new technologies, it advises policymakers to prioritize patient safety and protection while technology firms work towards commercializing LLMs.

WHO proposes addressing these concerns and obtaining clear evidence of the benefits before extensively implementing LLMs in routine healthcare and medicine, whether by individuals, care providers, or healthcare system administrators and policymakers.

Furthermore, WHO emphasizes the significance of adhering to ethical principles and implementing appropriate governance, as outlined in its guidance on the ethics and governance of AI for health.

WHO identifies six core principles:

- Safeguarding autonomy

- Promoting human well-being, safety, and the public interest

- Ensuring transparency, explainability, and comprehensibility

- Fostering responsibility and accountability

- Ensuring inclusivity and equity

- Promoting AI that is responsive and sustainable