The week could not end without some major AI news.

On Thursday, Stability AI announced their newest text-to-image model, Stable Diffusion 3. They are calling it their most capable model to date, and from the examples provided, that leaves no doubt.

Not only is this open source model their most capable model, it could very well be the most capable text-to-image model, period.

It’s not open to the public yet, but you can sign up to join the waitlist.

Now, you may be thinking that text-to-image is already so good especially on something like Midjourney, that there’s no way it can get better.

Well, Stable Diffusion 3 is about to blow your mind.

First of all, it has perfect spelling, well, at least from the examples provided. They say they’re using a new type of diffusion transformer, similar to Sora, combined with flow matching.

While Midjourney and Dall-E spelling has come a long way, they still make frequent glaring mistakes. That seems to be a thing of the past with Stable Diffusion 3.

Check out these prompts and the results.

But if you think that’s impressive, SD3 will blow your mind with its ability to understand and follow prompts. Let’s face it, none of the existing models do this very well.

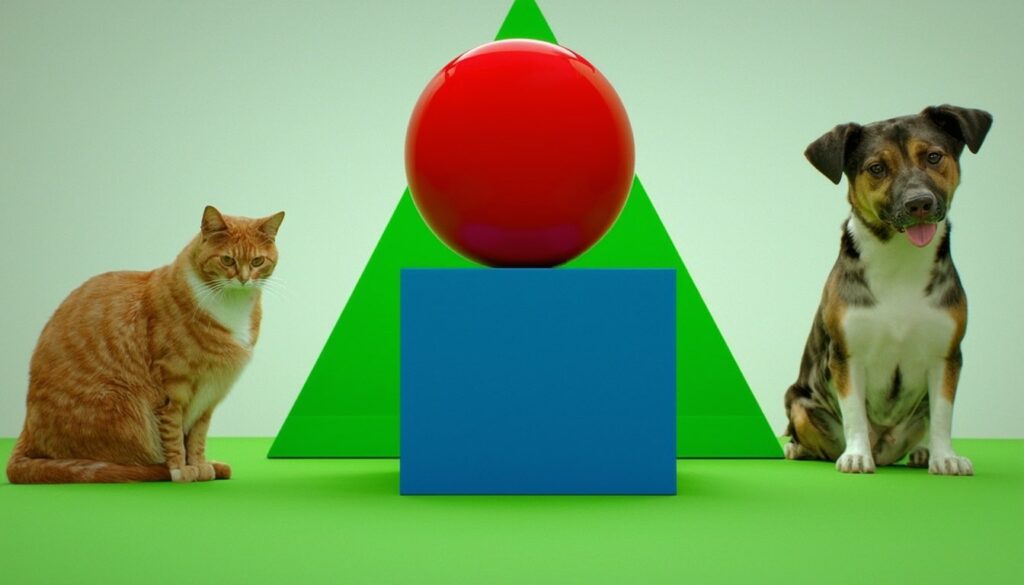

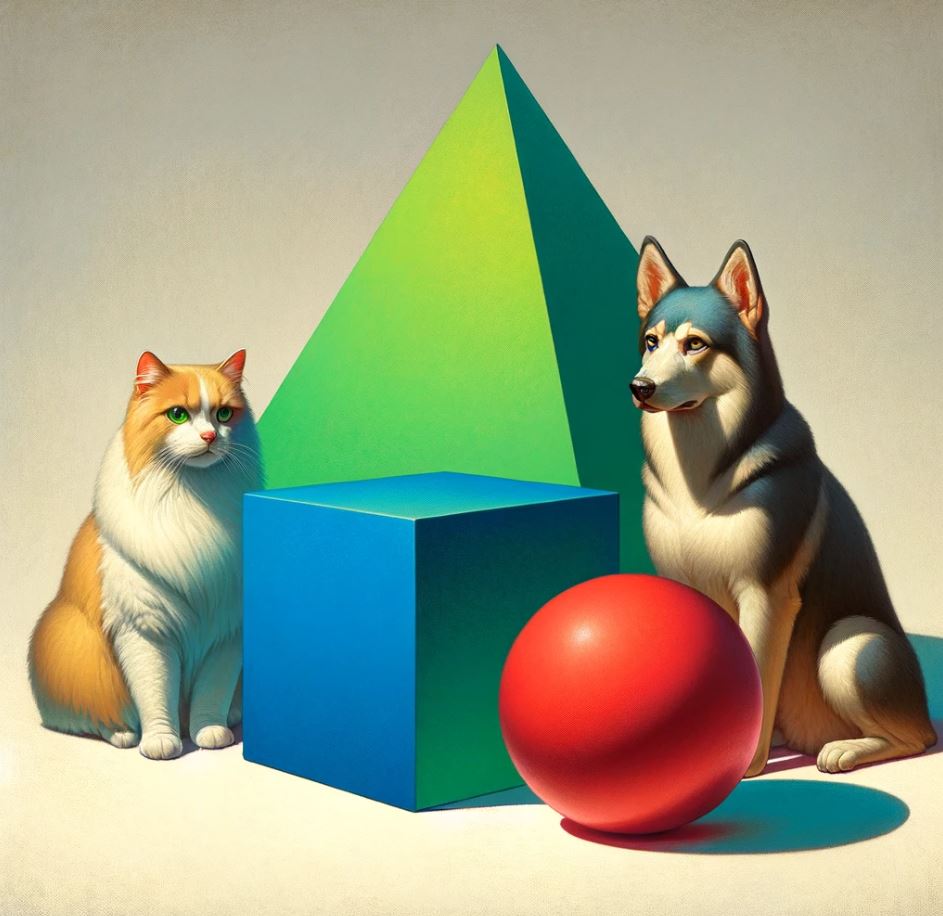

And this next example encapsulate this perfectly. The prompt is, “Photo of a red sphere on top of a blue cube. Behind them is a green triangle, on the right is a dog, on the left is a cat.”

This is the SD3 output.

As you can see, it followed that prompt exactly. Literally this is what the user had in mind when typing.

To see just how much of a leap this is in prompt understanding, I gave the same prompt to Dall-E.

This is what is spit out.

Not the worst image, but you can see there’s a problem in following the prompt. For instance, the red sphere is not on top of the blue cube. And no matter how many times I tried, it never really got it 100% once.

If these are not cherry-picked examples from SD3, this will truly be revolutionary.

Here’s another example of how well it adheres to the prompt.

And the Dall-E equivalent.

At least it did get the 90’s computer with the word ‘Welcome’, but what is that graffiti?

Here’s another one. “Night photo of a sports car with the text “SD3” on the side, the car is on a race track at high speed, a huge road sign with the text “faster”.

Literally everything is on point with SD3.

Dall-E was not too bad, but its the small details that will make the difference, especially for professionals. For instance, SD3 does not appear on the side of the car, as instructed in the prompt.

The Stable Diffusion 3 suite of models currently range from 800M to 8B parameters, and they are taking safety very seriously.

This is what they had to say, “We believe in safe, responsible AI practices. This means we have taken and continue to take reasonable steps to prevent the misuse of Stable Diffusion 3 by bad actors. Safety starts when we begin training our model and continues throughout the testing, evaluation, and deployment. In preparation for this early preview, we’ve introduced numerous safeguards. By continually collaborating with researchers, experts, and our community, we expect to innovate further with integrity as we approach the model’s public release. “

So far there’s no word on when this will be made public. I’ll be looking forward to put it to the test as soon as I get access.