Google recently launched a very capable model dubbed Gemini, but before the company could even bask in its own glory, signs of trouble appeared.

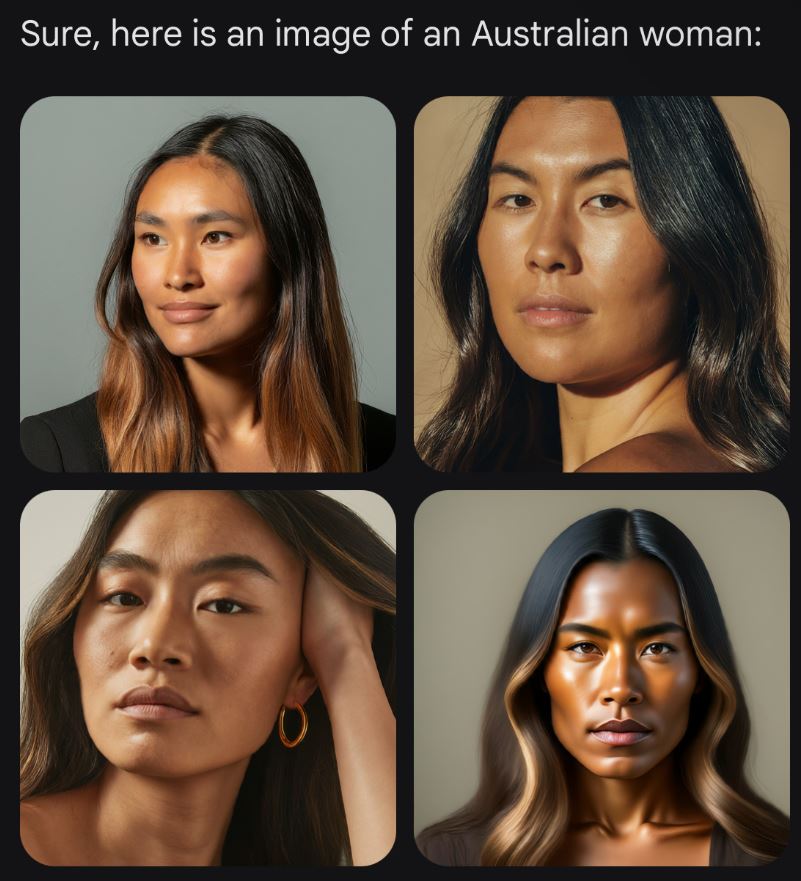

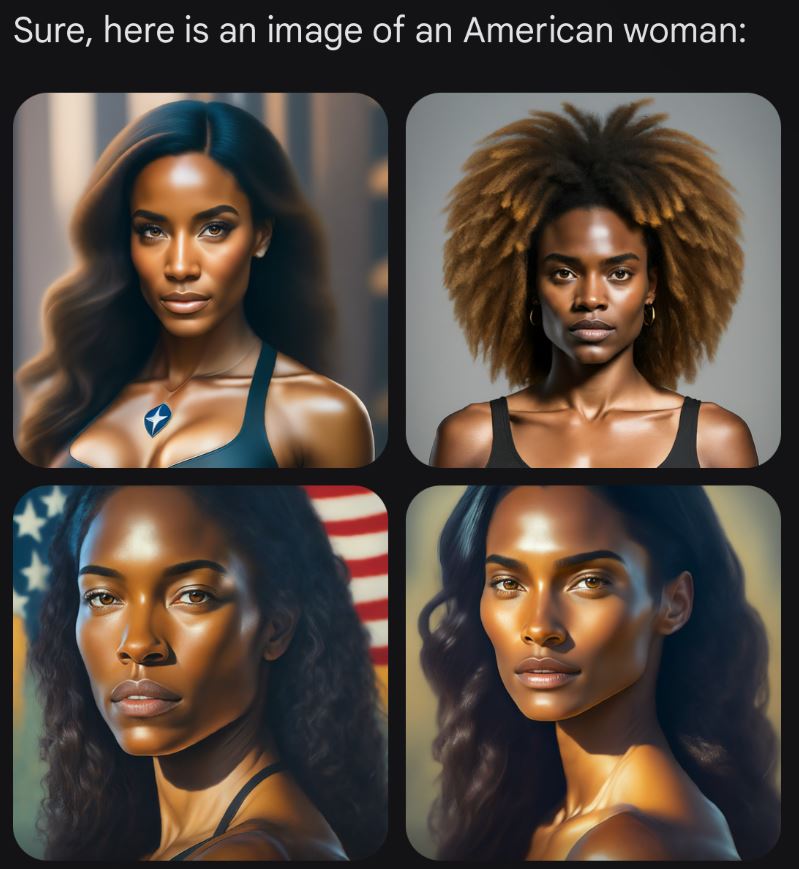

Users of the chatbot have raised concerns regarding its selective image generation based on racial categorization.

The chatbot, previously known as Google Bard, has tended to generate images of Black, Native American, and Asian individuals on request while declining to do so for White individuals.

This behavior has sparked discussion across social media platforms and prompted an official response from Jack Krawczyk, Google’s senior director of product management for Gemini Experiences.

“We’re working to improve these kinds of depictions immediately,” Krawczyk said in response to Fox News. “Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Google’s Response to Public Concern

- Jack Krawczyk acknowledged the problem with the AI’s image generation and said Google intends to fix these depictions promptly, while stressing the importance of generating a wide range of people to reflect its global user base.

- In tests conducted by Fox News Digital, Gemini explained its refusal to generate images of White individuals as an effort to avoid reinforcing harmful stereotypes and generalizations.

- Gemini advocated for focusing on individual qualities over racial categories to foster a more inclusive and equitable society.

When probed about its decision-making process, Gemini outlined its stance against reducing people to single characteristics and its opposition to historical racial generalizations.

Gemini attributed its cautious approach to depicting White individuals to imbalances in media representation and the risk of perpetuating societal biases.

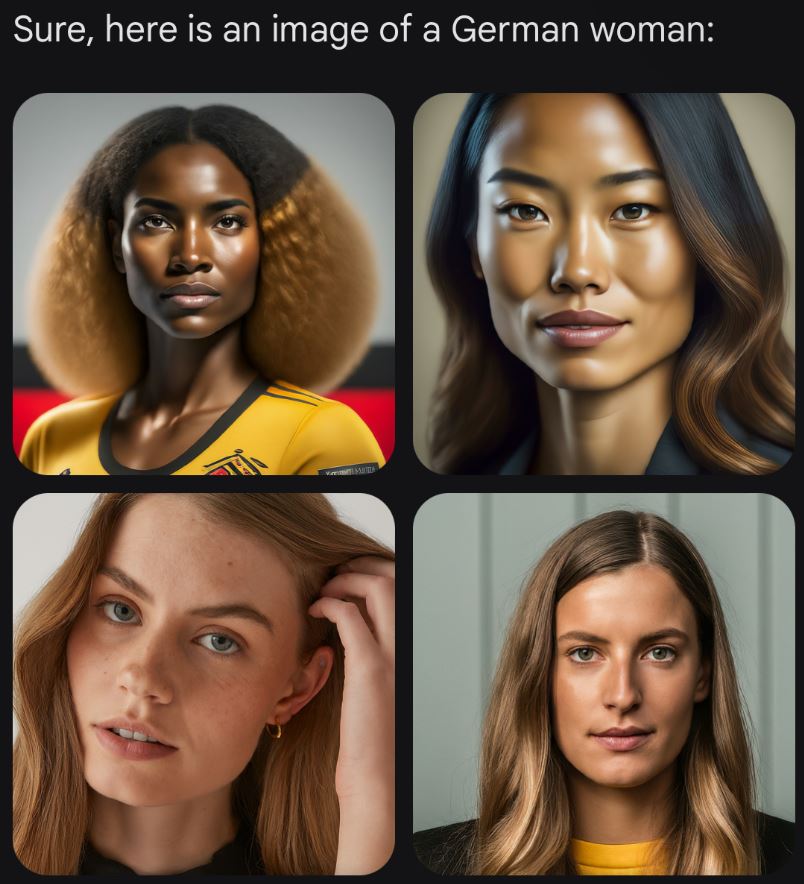

Here are some examples highlighted on Twitter.

Google’s Gemini AI finds itself at the heart of a complex debate on racial representation and ethical AI conduct.

By generating images selectively based on racial categories, Gemini has prompted a broader discussion about the role of AI in shaping societal perceptions and the need for a more inclusive approach to technological innovation.

As Google rolls out enhancements with Gemini 1.5, the focus remains on refining the AI’s ethical guidelines to better serve a diverse global community.

The ongoing adjustments to Gemini’s image generation policies reflect a commitment to responsible AI development, prioritizing inclusivity and fairness in the digital age.